on control variates

A few months ago, I had to write a thesis evaluation of Rémi Leluc’s PhD, which contained several novel Monte Carlo proposals on control variates and importance sampling techniques. For instance, Leluc et al. (Statistics and Computing, 2021) revisit the concept of control variates by adding a perspective of control variate selection using the LASSO. This prior selection is relevant since control variates are not necessarily informative about the objective function being integrated, and my experience is that the more variates, the less reliable the improvement. The remarkable feature of the results is in obtaining explicit and non-asymptotic bounds.
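To make the selection idea concrete, here is a minimal sketch of a LASSO-penalised control variate estimator, not the estimator of the paper itself. The integrand exp(x) under a uniform distribution on [0, 1], the centred polynomial controls, the penalty `lam`, and the helper names `soft_threshold` and `lasso_control_variates` are all illustrative assumptions of mine. The fitted intercept of the penalised regression of the integrand on the controls serves as the integral estimate.

```python
import math
import random

def soft_threshold(z, lam):
    """Soft-thresholding operator used in the LASSO coordinate update."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def lasso_control_variates(f_vals, H, lam, n_sweeps=50):
    """Approximately minimise, by cyclic coordinate descent,
        (1/2n) * sum_i (f_i - alpha - beta . h_i)^2 + lam * ||beta||_1
    and return (alpha, beta); the intercept alpha is the integral estimate."""
    n, p = len(f_vals), len(H[0])
    f_bar = sum(f_vals) / n
    h_bar = [sum(row[k] for row in H) / n for k in range(p)]
    # Centre responses and controls so the intercept drops out of the updates.
    fc = [f - f_bar for f in f_vals]
    Hc = [[row[k] - h_bar[k] for row in H] for k in range(p)]  # stored by column
    col_ms = [sum(v * v for v in col) / n for col in Hc]
    beta = [0.0] * p
    resid = fc[:]  # current residual fc - Hc beta (beta starts at zero)
    for _ in range(n_sweeps):
        for k in range(p):
            col = Hc[k]
            # Covariance of control k with the residual that excludes control k.
            rho = sum(c * r for c, r in zip(col, resid)) / n + col_ms[k] * beta[k]
            new = soft_threshold(rho, lam) / col_ms[k]
            if new != beta[k]:
                delta = new - beta[k]
                resid = [r - delta * c for r, c in zip(resid, col)]
                beta[k] = new
    # Optimal intercept for the fitted beta.
    alpha = f_bar - sum(b * hb for b, hb in zip(beta, h_bar))
    return alpha, beta

# Toy problem (assumed for illustration): integrate exp(x) over [0, 1].
rng = random.Random(0)
n, p = 2000, 6
xs = [rng.random() for _ in range(n)]
f_vals = [math.exp(x) for x in xs]
# Controls x^k - 1/(k+1) have exactly zero mean under the uniform distribution.
H = [[x ** k - 1.0 / (k + 1) for k in range(1, p + 1)] for x in xs]

estimate, beta = lasso_control_variates(f_vals, H, lam=0.01)
plain = sum(f_vals) / n
print("plain Monte Carlo:", plain)
print("LASSO control variates:", estimate)
print("exact value:", math.e - 1)
print("selected controls:", [k + 1 for k, b in enumerate(beta) if b != 0.0])
```

Coefficients shrunk exactly to zero correspond to controls the penalty discards, which is the selection step. A larger `lam` keeps fewer controls, at the price of a less efficient correction.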

The author obtains a concentration inequality on the error resulting from the use of control variates, under strict assumptions on the variates. The associated numerical experiment illustrates the difficulties of practically implementing these principles due to the number of parameters to calibrate. I found the example of a capture-recapture experiment on birds (European Dipper) particularly interesting, not only because we had used it in our book but also because it highlights the dependence of estimates on the dominating measure.

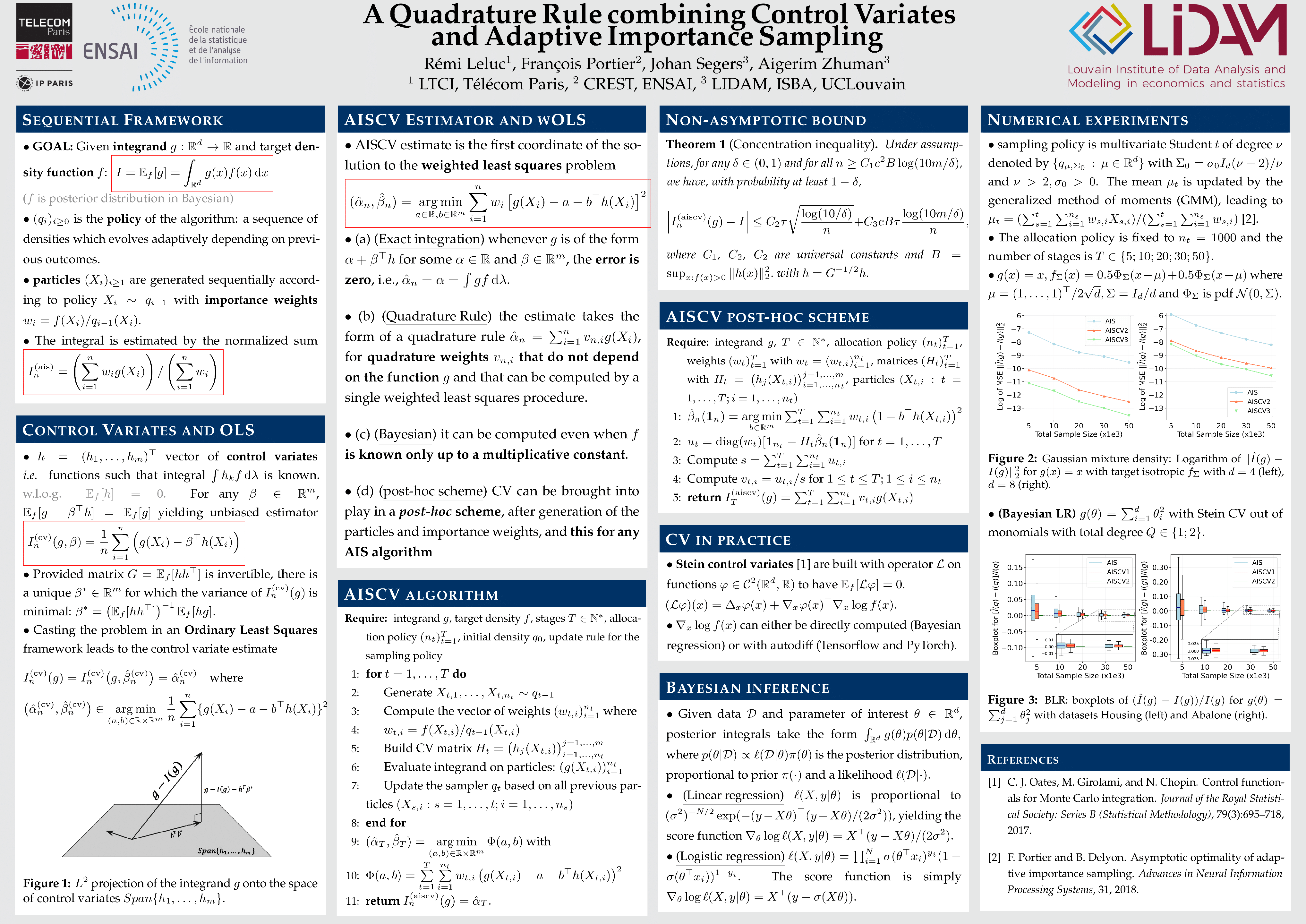

Based on a NeurIPS 2022 poster presentation, Chapter 3 is devoted to the use of control variates in sequential Monte Carlo, where a sequence of importance functions is constructed based on previous iterations to improve the approximation of the target distribution. Under relatively strong assumptions, namely importance functions dominating the target distribution (which could generally be achieved by using an increasing fraction of the data in a partial posterior distribution) and sub-Gaussian tails for the residual of an intractable distribution, a concentration inequality is established for the adaptive control variate estimator.

This chapter uses a different family of control variates, based on a Stein operator introduced in Mira et al. (2016). In the case where the target is a mixture in $\mathbb{R}^d$, one of our benchmarks in Cappé et al. (2008), remarkable gains are obtained for relatively high dimensions. While the computational demands of these improvements are not mentioned, the comparison with an MCMC approach (NUTS) based on the same number of particles demonstrates a clear improvement in Bayesian estimation.
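As a reminder of why Stein-type control variates are centred by construction, here is the usual Langevin-type identity. This is a sketch under assumptions that are mine rather than the thesis's: a smooth target density $\pi$ on $\mathbb{R}^d$, smooth test functions $\varphi_1,\dots,\varphi_m$ (notation introduced here), and tails light enough for the boundary term in the integration by parts to vanish.

```latex
\begin{aligned}
h_\varphi(x) &= \Delta\varphi(x) + \nabla\varphi(x)^{\top}\nabla\log\pi(x),\\
\mathbb{E}_\pi\left[h_\varphi(X)\right] &= \int \nabla\cdot\left\{\pi(x)\,\nabla\varphi(x)\right\}\mathrm{d}x = 0,\\
\hat{I}_n(f) &= \frac{1}{n}\sum_{i=1}^{n}\left\{f(X_i) - \beta^{\top}h(X_i)\right\},
\qquad h = (h_{\varphi_1},\dots,h_{\varphi_m})^{\top}.
\end{aligned}
```

Only $\nabla\log\pi$ enters $h_\varphi$, so the normalising constant of the target is not needed, which is what makes this family usable in Bayesian settings.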

Chapter 4 corresponds to a very recent arXival and presents a very original approach to control variate correction by reproducing the distribution of interest through a nearest-neighbor (leave-one-out) approximation. It requires neither a control function nor, necessarily, additional simulation, except for the evaluation of the integral, which is rather remarkable, forming a kind of parallel with the bootstrap. (Any other approximation of the distribution would also be acceptable if available at the same computational cost.) The thesis aims to establish the convergence of the method when integration is performed by a Voronoi tessellation, which leads to an optimal rate of order $n^{-1-2/d}$ for the quadratic error (under regularity conditions on the integrand). In the alternative where the integral must be evaluated by Monte Carlo, this optimality disappears, unless a massive number of simulations is used. Numerical illustrations cover SDEs and a Bayesian hierarchical model already used in Oates et al. (2017), with massive gains in both cases.
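For intuition, here is a simplified one-dimensional sketch in the spirit of a nearest-neighbor control variate, not a reproduction of the thesis's estimator. It assumes points uniform on [0, 1], where the Voronoi cells are intervals whose lengths are available exactly, and the function name `control_neighbors_1d` is hypothetical. The estimate is the exact integral of the 1-nearest-neighbor interpolant plus the average leave-one-out residual.

```python
import math
import random

def control_neighbors_1d(f, xs):
    """Nearest-neighbor control variate estimate of the integral of f over
    [0, 1], from points xs assumed uniform on [0, 1] (needs len(xs) >= 2)."""
    n = len(xs)
    pts = sorted(xs)
    vals = [f(x) for x in pts]
    # Exact integral of the 1-NN interpolant: each point owns its Voronoi
    # cell, bounded by the midpoints to its neighbors (and by 0 and 1).
    bounds = [0.0] + [(a + b) / 2 for a, b in zip(pts, pts[1:])] + [1.0]
    integral_nn = sum(v * (hi - lo) for v, lo, hi in zip(vals, bounds, bounds[1:]))
    # Leave-one-out residuals: f at each point minus f at its nearest other point.
    resid_sum = 0.0
    for i in range(n):
        left = pts[i] - pts[i - 1] if i > 0 else math.inf
        right = pts[i + 1] - pts[i] if i < n - 1 else math.inf
        j = i - 1 if left <= right else i + 1
        resid_sum += vals[i] - vals[j]
    return integral_nn + resid_sum / n

# Toy check (assumed for illustration): integrate exp(x) over [0, 1].
rng = random.Random(1)
xs = [rng.random() for _ in range(500)]
nn_estimate = control_neighbors_1d(math.exp, xs)
plain_estimate = sum(math.exp(x) for x in xs) / len(xs)
print("plain Monte Carlo:", plain_estimate)
print("nearest-neighbor control variate:", nn_estimate)
print("exact value:", math.e - 1)
```

No extra simulation is needed beyond the original sample, since the control function is built from the evaluations already at hand. In higher dimensions the Voronoi volumes are no longer available in closed form, which is where the Monte Carlo evaluation of the integral, and its cost, comes in.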
