My PhD student Charly Andral [presented at the mostly Monte Carlo seminar and] arXived a new preprint yesterday, on training a normalizing flow network as an importance sampler (as in Gabrié et al.) or an independent Metropolis proposal, and exploiting its invertibility to call quasi-Monte Carlo low discrepancy sequences to boost its efficiency. (Training the flow is not covered by the paper.) This extends the recent study of He et al. (which was presented at MCM 2023 in Paris) to the normalising flow setting. In the current experiments, the randomized QMC samples are computed using the SciPy package (Roy et al. 2023), where the Sobol’ sequence is based on Joe and Kuo (2008) and on Matouˇsek (1998) for the scrambling, and where the Halton sequence is based on Owen (2017). (No pure QMC was harmed in the process!) The flows are constructed using the package FlowMC. As expected the QMC version brings a significant improvement in the quality of the Monte Carlo approximations, for equivalent computing times, with however a rapid decrease in the efficiency as the dimension of the targetted distribution increases. On the other hand, the architecture of the flow demonstrates little relevance. And the type of RQMC sequence makes a difference, the advantage apparently going to a scrambled Sobol’ sequence.

My PhD student Charly Andral [presented at the mostly Monte Carlo seminar and] arXived a new preprint yesterday, on training a normalizing flow network as an importance sampler (as in Gabrié et al.) or an independent Metropolis proposal, and exploiting its invertibility to call quasi-Monte Carlo low discrepancy sequences to boost its efficiency. (Training the flow is not covered by the paper.) This extends the recent study of He et al. (which was presented at MCM 2023 in Paris) to the normalising flow setting. In the current experiments, the randomized QMC samples are computed using the SciPy package (Roy et al. 2023), where the Sobol’ sequence is based on Joe and Kuo (2008) and on Matouˇsek (1998) for the scrambling, and where the Halton sequence is based on Owen (2017). (No pure QMC was harmed in the process!) The flows are constructed using the package FlowMC. As expected the QMC version brings a significant improvement in the quality of the Monte Carlo approximations, for equivalent computing times, with however a rapid decrease in the efficiency as the dimension of the targetted distribution increases. On the other hand, the architecture of the flow demonstrates little relevance. And the type of RQMC sequence makes a difference, the advantage apparently going to a scrambled Sobol’ sequence.

Archive for Metropolis-Hastings algorithm

combining normalizing flows and QMC

Posted in Books, Kids, Statistics with tags Arianna Rosenbluth, arXiv, Illya Sobol, importance sampling, inverse cdf, John Halton, MCM 2023, Metropolis-Hastings algorithm, mostly Monte Carlo seminar, normalizing flow, Python, quasi-Monte Carlo methods, scrambling, Sobol sequences on January 23, 2024 by xi'anreheated vanilla Rao-Blackwellisation

Posted in Kids, Statistics, University life, Books with tags Cross Validation, Markov chains, MCMC, Metropolis-Hastings algorithm, Rao-Blackwellisation on December 18, 2023 by xi'an Over the weekend, I came across a X validated question asking for clarification about our 2012 Vanilla Rao-Blackwellisation paper with Randal. Question written in a somewhat formal style that made our work difficult to recognise… At least for yours truly.

Over the weekend, I came across a X validated question asking for clarification about our 2012 Vanilla Rao-Blackwellisation paper with Randal. Question written in a somewhat formal style that made our work difficult to recognise… At least for yours truly.

Interestingly this led another (major) contributor to X validation to work out an uncompleted illustration as attached, when the target distribution is (1-x)². It seems strange to me that the basics of the method proves such a difficulty to fathom, given that it is a simple integration of the (actual and virtual) uniforms…. The point of the OP that the improvement brought by Rao-Blackwellisation is only conditional on the accepted values is correct, though.

Exact MCMC with differentially private moves

Posted in Statistics with tags data privacy, differential privacy, MCMC, Metropolis-Hastings algorithm, penalty algorithm, private data, pseudo-marginal MCMC, Statistics and Computing on September 25, 2023 by xi'an“The algorithm can be made differentially private while remaining exact in the sense that its target distribution is the true posterior distribution conditioned on the private data (…) The main contribution of this paper arises from the simple observation that the penalty algorithm has a built-in noise in its calculations which is not desirable in any other context but can be exploited for data privacy.”

Another privacy paper by Yldirim and Ermis (in Statistics and Computing, 2019) on how MCMC can ensure privacy. For free. The original penalty algorithm of Ceperley and Dewing (1999) is a form of Metropolis-Hastings algorithm where the Metropolis-Hastings acceptance probability is replaced with an unbiased estimate (e.g., there exists an unbiased and Normal estimate of the log-acceptance ratio, λ(θ, θ’), whose exponential can be corrected to remain unbiased). In that case, the algorithm remains exact.

“Adding noise to λ(θ, θ) may help with preserving some sort of data privacy in a Bayesian framework where [the posterior], hence λ(θ, θ), depends on the data.”

Rather than being forced into replacing the Metropolis-Hastings acceptance probability with an unbiased estimate as in pseudo-marginal MCMC, the trick here is in replacing λ(θ, θ’) with a Normal perturbation, hence preserving both the target (as shown by Ceperley and Dewing (1999)) and the data privacy, by returning a noisy likelihood ratio. Then, assuming that the difference sensitivity function for the log-likelihood [the maximum difference c(θ, θ’) over pairs of observations of the difference between log-likelihoods at two arbitrary parameter values θ and θ’] is decreasing as a power of the sample size n, the penalty algorithm is differentially private, provided the variance is large enough (in connection with c(θ, θ’)] after a certain number of MCMC iterations. Yldirim and Ermis (2019) show that the setting covers the case of distributed, private, data. even though the efficiency decreases with the number of (protected) data silos. (Another drawback is that the data owners must keep exchanging likelihood ratio estimates.

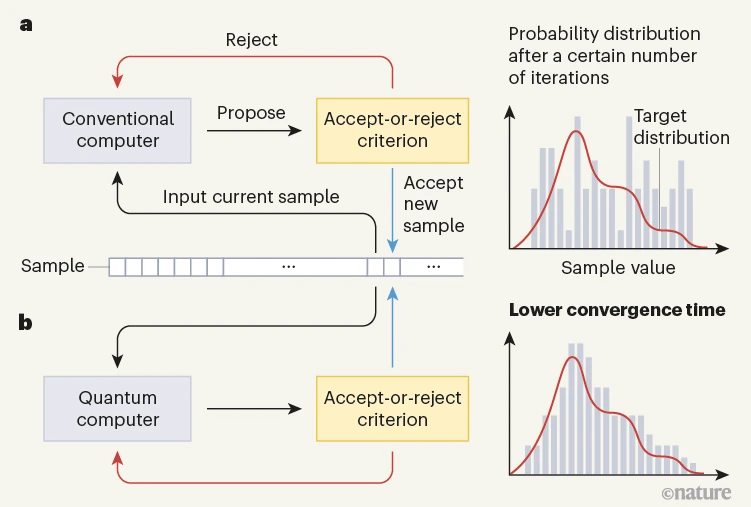

Quantum-enhanced Markov chain Monte Carlo

Posted in Books, Statistics with tags Arianna Rosenbluth, Ising model, MCMC, Metropolis-Hastings algorithm, Nature, perfect sampling on September 2, 2023 by xi'an A rare occurrence of an MCMC paper in Nature!!! David Layden and co-authors published this paper on 12 July, about using a quantum proposal in a Metropolis-Rosenbluth-Hastings simulation of an Ising model. More specifically, based on “quenched dynamics of a transverse-field quantum Ising model20, which can be efficiently simulated on a quantum computer21“, which amounts to using a Hamiltonian proposal. I tried to dig through the supplementary material to understand the implementation and the requirement for a quantum computer, but failed… (The picture below is from the News & Views tribune on the paper. It does not help.) The above illustrates the ability of the algorithm to explore more efficiently likely low energy configurations of the Ising model when compared with standard solutions, although I could not fathom if the time cost of resorting to the quantum computer for the former was accounted for.

A rare occurrence of an MCMC paper in Nature!!! David Layden and co-authors published this paper on 12 July, about using a quantum proposal in a Metropolis-Rosenbluth-Hastings simulation of an Ising model. More specifically, based on “quenched dynamics of a transverse-field quantum Ising model20, which can be efficiently simulated on a quantum computer21“, which amounts to using a Hamiltonian proposal. I tried to dig through the supplementary material to understand the implementation and the requirement for a quantum computer, but failed… (The picture below is from the News & Views tribune on the paper. It does not help.) The above illustrates the ability of the algorithm to explore more efficiently likely low energy configurations of the Ising model when compared with standard solutions, although I could not fathom if the time cost of resorting to the quantum computer for the former was accounted for.

“In experiments, our quantum algorithm converged in fewer iterations than common classical MCMC alternatives, suggesting unusual robustness to noise”

While this paper is a significant foray into quantum MCMC, its target is the modest Ising model for n=10 nodes, with very special features that seem to contribute to the construction of the proposal. A model that can be exactly simulated, either directly for that size or by perfect sampling à la Propp & Wilson for larger n’s. And whose discretisation is not too far from the model considered by Metropolis, [mostly] the Rosenbluths, and the Tellers in the 1950’s. It thus remains to see how extensions can be built for more realistic targets.

exact yet private MCMC

Posted in Statistics with tags Arrowleaf Cellars, differential privacy, ergodicity, ICML 2023, Lake Okanagan, MCMC, Metropolis-Hastings algorithm, Okanagan vineyards, Poisson subsampling, reversibility, spectral gap, stationarity on August 9, 2023 by xi'an

“at each iteration, DP-fast MH first samples a minibatch size and checks if it uses a minibatch of data or full-batch data. Then it checks whether to require additional Gaussian noise. If so, it will instantiate the Gaussian mechanism which adds Gaussian noise to the energy difference function. Finally, it chooses accept or reject θ′ based on the noisy acceptance probability.”

Private, Fast, and Accurate Metropolis-Hastings for Large-Scale Bayesian Inference is an(other) ICML²³ paper, written by Wanrong Zhang and Ruqi Zhang. Who are running MCMC under DP constraints. For one thing, they compute the MH acceptance probability with a minibatch, which is Poisson sampled (in order to guarantee privacy). It appears as a highly calibrated algorithm (see, e.g., Algorithm 1). Under the assumption (1) that the difference between individual log densities for two values of the parameter is upper bounded (in the data), differential privacy is established as failing to detect for certain a datapoint from the MCMC output. Interestingly, the usual randomisation leading to pricacy is operated on the energy level, rather than on observations or summary statistics, although this may prove superfluous when there is enough randomness provided by the MH step itself: “inherent privacy guarantees in the MH algorithm”

“when either the privacy hyperparameter ϵ or δ becomes small, the convergence rate becomes small, characterizing how much the privacy constraint slows down the convergence speed of the Markov chain”

The major results of the paper are privacy guarantees (at each iteration) and preservation of the proper target distribution, in contrast with earlier versions. In particular, adding the Gaussian noise to the energy does not impact reversibility. (Even though I am not 100% sure I buy the entire argument about reversibility (in Appendix C) as it sounds too easy!) The authors even achieve a bound on the relative spectral gaps.