Archive for Monte Carlo methods

mostly MC [April]

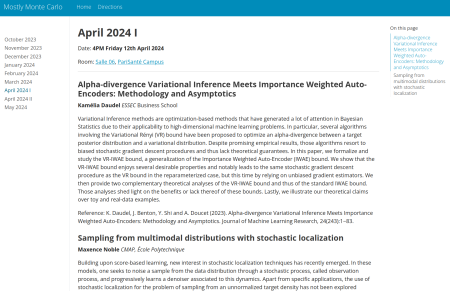

Posted in Books, Kids, Statistics, University life with tags #ERCSyG, Bayesian computational methods, Bayesian inference, denoising, generative models, Institut PR[AI]RIE, JMLR, machine learning, Markov chain Monte Carlo, MCMC, Monte Carlo methods, Monte Carlo Statistical Methods, mostly Monte Carlo seminar, multimodal target, Ocean, optimization, Paris, PariSanté campus, PSC, sampling, score-based generative models, seminar, simulation, stochastic diffusions, stochastic localization, variational autoencoders on April 5, 2024 by xi'an

mostly M[ar]C[h]

Posted in Books, Kids, Statistics, University life with tags diffusions, generative modelling, generative models, Monte Carlo methods, Monte Carlo Statistical Methods, mostly Monte Carlo seminar, normalizing flow, optimal transport, optimization, Paris, PariSanté campus, push-forward distribution, seminar, stochastic diffusions, The Prairie Chair on February 27, 2024 by xi'an

mostly MC[bruary]

Posted in Books, Kids, Statistics, University life with tags completion, contour algorithm, Gibbs sampling, insufficiency, IQR, mad, manifold, MCMC, median, Monte Carlo methods, Monte Carlo Statistical Methods, mostly Monte Carlo seminar, Paris, PariSanté campus, sample median, seminar, The Prairie Chair on February 18, 2024 by xi'an