On my last trip to Warwick, the local (RER) train I boarded broke down on its way to CDG airport, after hitting something in a tunnel just three stops short of the airport, with so much delay and misleading communication that I missed my flight. While this was a minor issue for me, since I managed to work (and blog) in an airport lounge for most of the day (where I crossed paths with Numerobis) while waiting for the only flight to B’ham, it made me reflect anew on the very poor state of the transportation network in Paris and its suburbs, with such incidents (power failures, broken rails, decrepit engines, stolen cables, idiots on the tracks, etc., even without mentioning the strikes) more and more the norm. And wonder at how the ancient and bursting network is going to cope with the incoming flow of visitors attending the Olympics this summer… Actually, when compared with the other cities with a reasonably efficient airport connection I have experienced, it remains a mystery to me why the Greater Paris conurbation (whose president, Valérie Pécresse, was apparently deemed the main culprit for our train breakdown by an incensed fellow passenger that morning!) has kept postponing for years the construction of a dedicated rail line between the airport and central Paris, as the unpredictable and uncomfortable suburban train is not delivering the intended message to Paris visitors and their still-growing contribution to the French GNP. But with the growing public opposition to any new infrastructure, incl. trains, this is unlikely to happen!

Archive for Paris

travel woes

Posted in Travel, University life with tags Astérix et Obélix, Birmingham, CDG, De Gaulle airport, French politics, GNP, Jamel Debbouze, mass tourism, Paris, Paris 2024 Olympics, Paris suburbs, public transportation, RER B, SNCF, tourism nuisances, train travel, University of Warwick on April 29, 2024 by xi'an

simulation as optimization [by kernel gradient descent]

Posted in Books, pictures, Statistics, University life with tags ABC, biking, Charles Stein, CREST, diffusions, discrepancies, Edo, Gare de Lyon, gradient descent, Hiroshige, INRIA, kernel Stein discrepancy descent, Kullback-Leibler divergence, maximum mean discrepancy, MCMC, Mokaplan, mollified discrepancy, New York city, One Hundred Famous Views of Edo, optimal transport, optimisation, Paris, simulation, SMC, Stein kernel on April 13, 2024 by xi'an

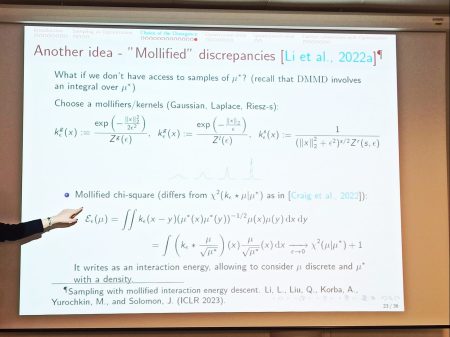

Yesterday, which proved an unseasonably bright and warm day, I biked (with a new wheel!) to the east of Paris, in the Gare de Lyon district where I lived for three years in the 1980s, to attend a Mokaplan seminar at INRIA Paris, where Anna Korba (CREST, with which I am also affiliated) talked about sampling through optimization of discrepancies.

This proved a most formative hour, as I had not seen this perspective earlier (or possibly had forgotten about it), except through some of the talks at the Flatiron Institute on Transport, Diffusions, and Sampling last year, including Marilou Gabrié’s and Arnaud Doucet’s.

The underlying concept remains attractive to me, since it consists in approximating the target distribution, known up to a constant (a setting I have always felt standard simulation techniques were not exploiting to the maximum) or through a sample (a setting less convincing since the sample from the target is already there), via a sequence of (particle-approximated) distributions, using the discrepancy between the current distribution and the target, or the gradient thereof, to move the particles. (With no randomness in the Kernel Stein Discrepancy Descent algorithm.)

Anna Korba spoke about practically running the algorithm, as well as about convexity properties and some convergence results (with mixed performances for the Stein kernel, as opposed to SVGD). I remain definitely curious about several aspects of the method, like the (ergodic) distribution of the endpoints, the actual gain against an MCMC sample when accounting for computing time, the improvement over the empirical distribution when using a sample from π and its ecdf as the substitute for π, and the meaning of an error estimation in this context.
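To make the idea of moving particles by a deterministic, gradient-driven rule more concrete, here is a minimal sketch of SVGD (Stein variational gradient descent) in one dimension. It is only an illustration under assumptions of mine, not the algorithm as presented in the talk, and it is SVGD rather than Kernel Stein Discrepancy Descent: the target is a N(2, 1) distribution accessed solely through its score ∇ log π (so the normalising constant is never needed), the kernel is Gaussian with a fixed bandwidth of 1, and the step size, number of particles, and number of iterations are arbitrary choices. The function names `score`, `svgd_step`, and `run_svgd` are hypothetical.

```python
import math
import random


def score(x, mean=2.0, std=1.0):
    """Gradient of log pi for an assumed N(mean, std^2) target.

    Only the score is used, so pi need only be known up to a constant.
    """
    return -(x - mean) / std**2


def svgd_step(particles, step=0.1, bandwidth=1.0):
    """One deterministic SVGD update with a Gaussian kernel (1-d sketch)."""
    n = len(particles)
    h2 = bandwidth**2
    updated = []
    for xi in particles:
        direction = 0.0
        for xj in particles:
            k = math.exp(-((xi - xj) ** 2) / (2.0 * h2))
            # Attraction: kernel-weighted score pulls particles towards
            # high-density regions of the target.
            direction += k * score(xj)
            # Repulsion: derivative of k(xj, xi) with respect to xj,
            # which keeps the particles from collapsing onto the mode.
            direction += k * (xi - xj) / h2
        updated.append(xi + step * direction / n)
    return updated


def run_svgd(n_particles=30, n_iter=300, seed=0):
    """Move particles from a poor initial guess towards the target."""
    rng = random.Random(seed)
    # The only randomness is in the initial particle positions.
    particles = [rng.gauss(-5.0, 0.5) for _ in range(n_particles)]
    for _ in range(n_iter):
        particles = svgd_step(particles)
    return particles


if __name__ == "__main__":
    final = run_svgd()
    m = sum(final) / len(final)
    s = math.sqrt(sum((x - m) ** 2 for x in final) / len(final))
    print(f"particle mean {m:.3f}, particle std {s:.3f}")
```

Once the starting positions are fixed, every update is a deterministic function of the current particle cloud, which is the feature that distinguishes this family of methods from an MCMC sampler. How the resulting particles compare with an MCMC sample at equal computing cost is not something this toy example can answer.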

“exponential convergence (of the KL) for the SVGD gradient flow does not hold whenever π has exponential tails and the derivatives of ∇ log π and k grow at most at a polynomial rate”

mostly MC [April]

Posted in Books, Kids, Statistics, University life with tags #ERCSyG, Bayesian computational methods, Bayesian inference, denoising, generative models, Institut PR[AI]RIE, JMLR, machine learning, Markov chain Monte Carlo, MCMC, Monte Carlo methods, Monte Carlo Statistical Methods, mostly Monte Carlo seminar, multimodal target, Ocean, optimization, Paris, PariSanté campus, PSC, sampling, score-based generative models, seminar, simulation, stochastic diffusions, stochastic localization, variational autoencoders on April 5, 2024 by xi'an

lecturing in Collège

Posted in Books, Kids, pictures, Statistics, University life with tags 1530, ABC, Collège de France, Docet omnia, France, La Sorbone, Leuven, Louvain, Marguerite de Navarre, Master program, Paris, Rabelais, random sampling, Statistical learning, Université de Paris on March 28, 2024 by xi'an

A few weeks ago I gave a seminar on ABC (and its asymptotics) in the beautiful amphitheatre Marguerite de Navarre (a writer, a thinker, and a protector of writers like Rabelais and of reformist Catholics, as well as the sister of the Collège founder, François I) of Collège de France, as a complement to the lecture of that week by Stéphane Mallat, who is teaching this year on Learning and random sampling. In this lecture, Stéphane introduced Metropolis-Hastings, as one can guess from the blackboards above! The amphitheatre was quite full since master students from several Parisian universities are following the course, along with the “general public”, since the first principle of the courses delivered at Collège de France is that they are open to everyone, free of charge and without preliminary registration! (As a countermeasure to the monopoly of the university of Paris, following the earlier example of the 1518 trilingual college of Louvain.) Here are the slides I partly covered in the lecture.