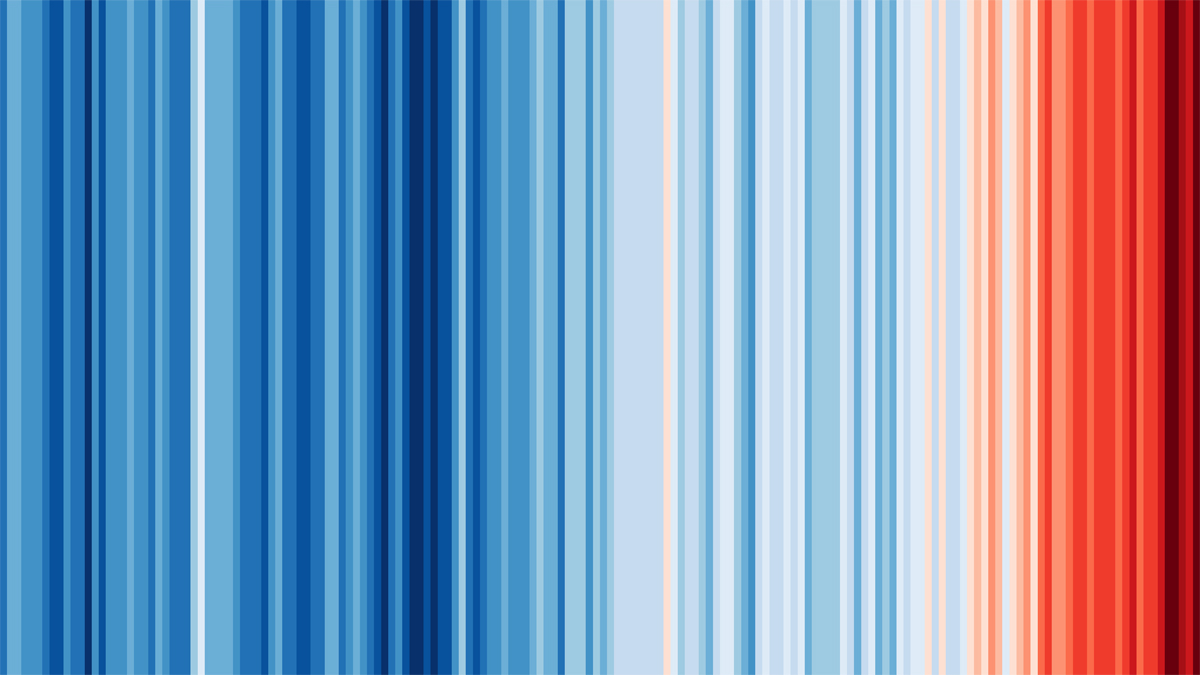

“The World changed significantly since 1973.” (p.10)

I read this book, The Privacy Fallacy: Harm and Power in the Information Economy, by Ignacio Cofone, upon my return from Warwick last week. This is a 2023 Cambridge University Press book I had picked from their publication list after reviewing a book proposal for them. The selection was made with our ERC OCEAN goals in mind, but without paying enough attention to the book's table of contents, since it proved to be a Law book!

“People’s inability to assess privacy risks impact people’s behavior toward privacy because it turns the risks into uncertainty, a kind of risk that is impossible to estimate.” (p.31)

Still, this ended up being a fairly interesting read (for me) about the shortcomings of the current privacy laws (in various countries), since they are based on an obsolete perception that predates AIs and social media. Its main theme is that privacy is a social value that must be protected, regardless of whether or not its breach has tangible consequences. The author then argues that the notions supporting these laws, such as the rationality of individual choices, the confusion between privacy and secrecy, the binary dichotomy between public and private, etc., are all erroneous, hence the “fallacy” he denounces. One immediate argument for his position is the extreme imbalance of information between individuals and corporations, the former being unable to assess the whole impact of clicking on “I agree” when visiting a webpage or installing a new app, all the more so because the data thus gathered is pipelined to third parties. (“One’s efforts cannot scale to the number of corporations collecting and using one’s personal data”, p.93) For similar reasons, Cofone further states that the current principles based on contracts are inappropriate, also because data harm can be collective and because companies have a strong incentive towards data exploitation, hence a moral hazard.

“Inferences, relational data, and de-identified data aren’t captured by consent provisions.” (p.9)

“AI inferences worsen information overload (…) As [they] continue to grow, so will the insufficiency of our processing ability to estimate our losses.” (p.75)

As illustrated by the surrounding quotes, the statistical and machine-learning aspects of the book are few and vague, in that the additional level of privacy loss due to post-data processing is considered as a further argument for said loss being impossible to quantify and assess, without a proper evaluation of the channels through which this can happen and without a regulatory proposal towards its control. This level of discourse makes AIs appear as omniscient methods, unfortunately.

“Inferences are invisible (…) Risks posed by inferences are impossible to anticipate because the information inferred is disproportionate to the sum of the information disclosed.” (p.49)

“The idea of probabilistic privacy loss is crucial in a world where entities (…) mostly affect our privacy by making inferences” (p.121)

The attempts at regulation such as opt-in and informed consent are then denounced as illusions (obviously so imho, even without considering the nuisance of having to click on “Reject” for each newly visited website!). De- and re-identified data does not require anyone’s consent. Data protection rights, as of today, do not provide protection in most cases, the burden of proof resting on the privacy victims rather than the perpetrators. The book unsurprisingly offers no technical suggestion towards ensuring that corporations and data brokers comply with this respect of privacy and, on the contrary, agrees that institutional attempts such as GDPR remain well-intended wishful thinking without imposing a hard-wired way of controlling the data flows, with the “need of an enforcement authority with investigating and sanctioning powers” (p.106). The only in-depth proposal therein is pushing for stronger accountability of these corporations via a new type of liability, with a prospect of class actions (if only in countries with this judicial possibility).

[Disclaimer about potential self-plagiarism: this post or an edited version will eventually appear in my Books Review section in CHANCE.]
