Archive for Markov chain Monte Carlo

mostly MC [April]

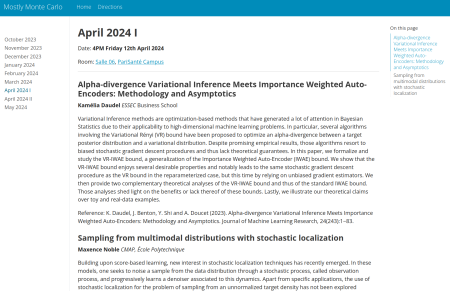

Posted in Books, Kids, Statistics, University life with tags #ERCSyG, Bayesian computational methods, Bayesian inference, denoising, generative models, Institut PR[AI]RIE, JMLR, machine learning, Markov chain Monte Carlo, MCMC, Monte Carlo methods, Monte Carlo Statistical Methods, mostly Monte Carlo seminar, multimodal target, Ocean, optimization, Paris, PariSanté campus, PSC, sampling, score-based generative models, seminar, simulation, stochastic diffusions, stochastic localization, variational autoencoders on April 5, 2024 by xi'an

Welcome to MCM 2025

Posted in Statistics with tags Chicago, conference, Illinois, Illinois Institute of Technology, Lake Michigan, Markov chain Monte Carlo, MCM 2025, memory lane, Mies campus, Monte Carlo methods, probabilistic numerics, Purdue University, quasi-Monte Carlo methods, USA, West Lafayette on July 7, 2023 by xi'an

Natural nested sampling

Posted in Books, Statistics, University life with tags astrostatistics, Bayesian computation, cosmostats, evidence, marginal likelihood, Markov chain Monte Carlo, MCMC, Monte Carlo Statistical Methods, Nature Methods, Nature Reviews Methods Primers, nested sampling, path sampling on May 28, 2023 by xi'an

“The nested sampling algorithm solves otherwise challenging, high-dimensional integrals by evolving a collection of live points through parameter space. The algorithm was immediately adopted in cosmology because it partially overcomes three of the major difficulties in Markov chain Monte Carlo, the algorithm traditionally used for Bayesian computation. Nested sampling simultaneously returns results for model comparison and parameter inference; successfully solves multimodal problems; and is naturally self-tuning, allowing its immediate application to new challenges.”

I came across a review on nested sampling in Nature Reviews Methods Primers of May 2022, with a large number of contributing authors, some of whom I knew from earlier papers in astrostatistics. As illustrated by the above quote from the introduction, the tone is definitely optimistic about the capacities of the method, reproducing the original argument that the evidence is the expectation of the likelihood L(θ) under the prior. This representation, while valid, does not translate into a dimension-free methodology, since the parameters θ still need to be simulated.
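As a minimal sketch of that representation (not taken from the review), the Python snippet below estimates the evidence Z = E_π[L(θ)] by averaging the likelihood over draws from the prior. It assumes a hypothetical conjugate toy model with independent coordinates, θ_j ~ N(0,1) a priori and y_j | θ_j ~ N(θ_j,1), for which the exact evidence is available since marginally y_j ~ N(0,2). The helper names `naive_log_evidence` and `exact_log_evidence` and the all-ones data vector are illustrative assumptions. In this toy the relative variance of the naive estimator grows geometrically with the dimension d, one way of seeing that the identity alone does not deliver a dimension-free method.

```python
import numpy as np

def log_normal_pdf(x, mean, var):
    # log density of N(mean, var), evaluated elementwise (broadcasts)
    return -0.5 * np.log(2 * np.pi * var) - 0.5 * (x - mean) ** 2 / var

def exact_log_evidence(y):
    # hypothetical toy: theta_j ~ N(0, 1), y_j | theta_j ~ N(theta_j, 1), independent,
    # so marginally y_j ~ N(0, 2) and log Z is a sum over coordinates
    return np.sum(log_normal_pdf(y, 0.0, 2.0))

def naive_log_evidence(y, n_draws, rng):
    # Z = E_prior[L(theta)]: average the likelihood over draws from the prior
    theta = rng.standard_normal((n_draws, y.size))
    log_lik = np.sum(log_normal_pdf(y, theta, 1.0), axis=1)
    # log-mean-exp, shifted by the maximum for numerical stability
    m = log_lik.max()
    return m + np.log(np.mean(np.exp(log_lik - m)))

rng = np.random.default_rng(0)
for d in (1, 10, 100):
    y = np.ones(d)  # hypothetical data: every coordinate observed at 1.0
    print(d, exact_log_evidence(y), naive_log_evidence(y, 50_000, rng))
```

For d = 1 and d = 10 the estimate should sit close to the exact value, while for d = 100 it typically falls well short, because a handful of prior draws carry almost all of the average.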

“Nested sampling lies in a class of algorithms that form a path of bridging distributions and evolves samples along that path. Nested sampling stands out because the path is automatic and smooth — compression along log X by, on average, 1/n at each iteration — and because along the path is compressed through constrained priors, rather than from the prior to the posterior. This was a motivation for nested sampling as it avoids phase transitions — abrupt changes in the bridging distributions — that cause problems for other methods, including path samplers, such as annealing.”
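One way to read the "1/n per iteration" claim, in the idealised case where the constrained prior is sampled exactly: with n live points whose prior masses are uniform on (0, X_{i-1}), the discarded (lowest-likelihood) point has the largest mass, so the shrinkage t_i = X_i/X_{i-1} is the largest of n Uniform(0,1) draws. Hence P(t_i ≤ s) = s^n, i.e. t_i ~ Beta(n,1), and -log t_i is Exponential with mean and standard deviation both equal to 1/n. The Python sketch below, with a hypothetical `simulate_log_shrinkage` helper, checks this by simulation; it illustrates the standard argument and is not code from the review.

```python
import numpy as np

def simulate_log_shrinkage(n_live, n_iter, rng):
    # idealised step: the surviving prior-mass fraction t = X_i / X_{i-1}
    # is the largest of n_live independent Uniform(0, 1) draws, i.e. Beta(n_live, 1)
    t = rng.uniform(size=(n_iter, n_live)).max(axis=1)
    return np.log(t)

rng = np.random.default_rng(1)
n_live = 50
log_t = simulate_log_shrinkage(n_live, 50_000, rng)
print(log_t.mean(), -1.0 / n_live)  # both close to -0.02
print(log_t.std(), 1.0 / n_live)    # the per-step spread is also about 1/n
# cumulative compression: log X_k should stay near -k/n, with fluctuations of order sqrt(k)/n
log_x = np.cumsum(log_t[:500])
print(log_x[-1], -500 / n_live)
```

The deterministic schedule log X_i ≈ -i/n used in practice replaces these random shrinkages by their expectation.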

The elephant in the room is eventually addressed, namely the simulation from the prior constrained to the likelihood level sets, which in my experience (with, e.g., mixture posteriors) proves the most time-consuming part. This stems from the fact that these level sets are notoriously difficult to evaluate from a given sample: all points stand within the set, but they hardly provide any indication of the boundaries of said set… Region sampling requires constructing a region that bounds the likelihood level set, which requires some knowledge of the likelihood variations to have a chance to remain efficient, incl. in cosmological applications, while regular MCMC moves require an increasing number of steps as the constraint gets tighter and tighter, for otherwise they essentially amount to duplicating a live particle.
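To make the constrained-simulation step concrete, here is a minimal toy sketch of nested sampling in Python, where each discarded point is replaced by copying another live point and running a few random-walk Metropolis moves restricted to the current likelihood level set; without those moves the replacement would merely duplicate a live particle. Everything here is an assumption for illustration: the uniform prior on [-5,5]², the Gaussian likelihood with σ = 0.5 (so that Z ≈ 1/100), the numbers of live points and MCMC steps, the deterministic approximation log X_i ≈ -i/n, and the rule scaling the proposal by the spread of the live points. It is not the implementation discussed in the review.

```python
import numpy as np

def log_likelihood(theta, sigma=0.5):
    # hypothetical toy target: isotropic Gaussian likelihood centred at the origin
    d = theta.shape[-1]
    return (-0.5 * np.sum(theta**2, axis=-1) / sigma**2
            - 0.5 * d * np.log(2 * np.pi * sigma**2))

def in_prior(theta, half_width=5.0):
    # support of the (assumed) uniform prior on [-half_width, half_width]^d
    return np.all(np.abs(theta) <= half_width)

def constrained_walk(start, log_l_min, step, n_steps, rng):
    # random-walk Metropolis targeting the prior restricted to {L > L_min}:
    # with a uniform prior, a move is accepted iff it stays in the box and above the threshold
    theta, log_l = start.copy(), log_likelihood(start)
    for _ in range(n_steps):
        prop = theta + step * rng.standard_normal(theta.size)
        if in_prior(prop):
            log_l_prop = log_likelihood(prop)
            if log_l_prop > log_l_min:
                theta, log_l = prop, log_l_prop
    return theta, log_l

def nested_sampling(d=2, n_live=100, n_iter=1000, n_steps=20, seed=0):
    rng = np.random.default_rng(seed)
    live = rng.uniform(-5.0, 5.0, size=(n_live, d))
    live_log_l = log_likelihood(live)
    log_z = -np.inf
    log_x_prev = 0.0  # log of the remaining prior mass, starting at log 1
    for i in range(1, n_iter + 1):
        worst = np.argmin(live_log_l)
        log_l_min = live_log_l[worst]
        log_x = -i / n_live  # deterministic approximation of E[log X_i]
        # weight X_{i-1} - X_i, computed in log space
        log_w = log_x_prev + np.log1p(-np.exp(log_x - log_x_prev))
        log_z = np.logaddexp(log_z, log_l_min + log_w)
        log_x_prev = log_x
        # copy a different live point (uniformly chosen), then move it under the constraint
        j = (worst + rng.integers(1, n_live)) % n_live
        step = live.std(axis=0)  # proposal scale shrinks with the constrained region
        live[worst], live_log_l[worst] = constrained_walk(
            live[j], log_l_min, step, n_steps, rng)
    # termination: remaining prior mass times the mean likelihood of the live points
    m = live_log_l.max()
    log_mean_l = m + np.log(np.mean(np.exp(live_log_l - m)))
    return np.logaddexp(log_z, log_x_prev + log_mean_l)

print(nested_sampling(), -np.log(100.0))
```

The estimate should land roughly near the exact -log 100 ≈ -4.61, up to a Monte Carlo error that shrinks with the number of live points. Shrinking the proposal with the spread of the live points is only a crude way of coping with the tightening constraint, and on multimodal targets such as mixture posteriors it can easily fail to move between modes.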

another first

Posted in Statistics with tags Chemical Physics Letters, history of Monte Carlo, importance sampling, John Valleau, Markov chain Monte Carlo, MCMC, Metropolis algorithm, umbrella sampling, Wilfred Keith Hastings on July 1, 2022 by xi'an

A question related to the earlier post on the first importance sampling in print, about the first Markov chain Monte Carlo in print. Again uncovered by Charly: a 1973 Chemical Physics Letters paper by Patey and Valleau, the latter inventing umbrella sampling with Torrie at about the same time. (In a 1972 paper in the same journal with Card, Valleau uses Metropolis Monte Carlo, while Hastings, also at the University of Toronto, uses Markov chain sampling.)

approximate Bayesian inference [survey]

Posted in Statistics with tags ABC, Approximate Bayesian computation, Bayesian statistics, CREST, entropy, expectation-propagation, Gibbs posterior, Langevin Monte Carlo, Laplace approximations, machine learning, Markov chain Monte Carlo, MCMC, PAC-Bayes, RIKEN, sequential Monte Carlo, special issue, survey, Tokyo, variational approximations on May 3, 2021 by xi'an

In connection with the special issue of Entropy I mentioned a while ago, Pierre Alquier (formerly of CREST) has written an introduction to the topic of approximate Bayesian inference that is worth advertising (and freely available as well). Its reference list is particularly relevant. (The deadline for submissions is 21 June.)