“We use the intuition that inference corresponds to integrating a density across the manifold corresponding to the set of inputs consistent with the observed outputs.”

Following my earlier post on that paper by Matt Graham and Amos Storkey (University of Edinburgh), I have now read through it. The beginning is somewhat unsettling, albeit mildly!, as it starts by mentioning notions like variational auto-encoders, generative adversarial nets, and simulator models, by which they mean generative models represented by a (differentiable) function g that essentially turns basic variates with density p into the variates of interest (with intractable density). A setting similar to Meeds’ and Welling’s optimisation Monte Carlo. Another proximity pointed out in the paper is with Meeds et al.’s Hamiltonian ABC.
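To make the notion of a simulator model concrete, here is a minimal sketch of a generative model written as a deterministic, differentiable function g of basic variates u. The particular generator (log-normal parameter, sinh-transformed noise) and every name in it are made up for illustration and do not come from the paper.

```python
import numpy as np

def generator(u):
    """Hypothetical toy simulator g: basic variates u -> (theta, y).

    u[0] drives the parameter and u[1:] drive the observation noise; both the
    log-normal prior and the sinh transform are illustrative assumptions.
    """
    theta = np.exp(u[0])        # positive parameter (log-normal prior)
    y = theta + np.sinh(u[1:])  # nonlinear transform of the noise inputs
    return theta, y

rng = np.random.default_rng(0)
u = rng.standard_normal(4)      # basic variates with simple density p = N(0, I)
theta, y = generator(u)         # one joint draw of parameter and pseudo-data
```

All the randomness sits in u, so running the generator twice on the same u returns the same pseudo-data, and the density p(u) is trivial to evaluate even when the density of y is not.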

“…the probability of generating simulated data exactly matching the observed data is zero.”

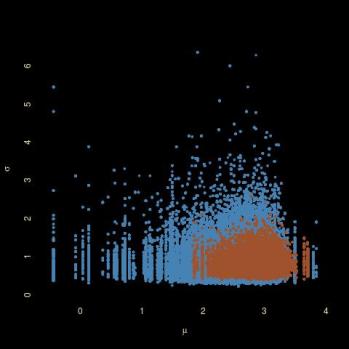

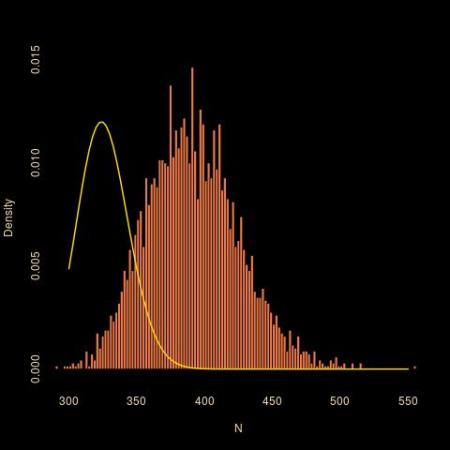

The section on the standard ABC algorithms mentions the fact that ABC MCMC can be (re-)interpreted as a pseudo-marginal MCMC, albeit one targeting the ABC posterior instead of the original posterior. The starting point of the paper is the above quote, which echoes a conversation I had with Gabriel Stoltz a few weeks ago, when he presented his free energy method to me and I could not see how to connect it with ABC, because requiring an exact match seemed to cancel the appeal of ABC, all parameter simulations then producing an exact match under the right constraint. However, the paper maintains this can be done, by looking at the joint distribution of the parameters, latent variables, and observables, under the implicit restriction imposed by keeping the observables constant, which defines a manifold. The mathematical validation is achieved by designing the density over this manifold, which looks like

if the constraint can be rewritten as g⁰(u)=0. (This actually follows from a 2013 paper by Diaconis, Holmes, and Shahshahani.) In the paper, the simulation is conducted by Hamiltonian Monte Carlo (HMC), the leapfrog steps consisting of an unconstrained move followed by a projection onto the manifold. This however sounds somewhat intense in that it involves a quasi-Newton resolution at each step. I also find it surprising that this projection step does not jeopardise the stationary distribution of the process, as the argument found therein about the approximation of the approximation is not particularly deep. But the main thing that remains unclear to me after reading the paper is how the constraint that the pseudo-data be equal to the observed data can be turned into a closed-form condition like g⁰(u)=0. As mentioned above, the authors assume a generative model based on uniform (or other simple) random inputs, but this representation seems impossible to achieve in reasonably complex settings.
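To get a feel for what the projection costs, here is a rough sketch of the two ingredients on a toy constraint. It assumes the density over the manifold is proportional to p(u) |J(u) J(u)ᵀ|^(-1/2), with J the Jacobian of g⁰, as the co-area formula gives, and it only covers the position projection, not a full constrained leapfrog step with its momentum update. The simulator g(u) = u₁ + u₂², the observed value, and all function names are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

Y_OBS = 2.0  # hypothetical observed value

def constraint(u):
    """Toy g0(u) = g(u) - y_obs with an assumed simulator g(u) = u[0] + u[1]**2."""
    return np.array([u[0] + u[1] ** 2 - Y_OBS])

def jacobian(u):
    """Jacobian of the toy constraint, shape (1, 2)."""
    return np.array([[1.0, 2.0 * u[1]]])

def log_manifold_density(u):
    """log of p(u) |J J^T|^(-1/2), up to a constant, for p = N(0, I) (assumed)."""
    J = jacobian(u)
    _, logdet = np.linalg.slogdet(J @ J.T)
    return -0.5 * (u @ u) - 0.5 * logdet

def project(u_free, J_prev, tol=1e-10, max_iter=50):
    """Pull u_free back onto {g0 = 0} along the rows of J_prev.

    Quasi-Newton flavour: the Gram matrix J_prev J_prev^T is computed once at
    the previous point and reused, instead of being refreshed at each iterate.
    """
    gram = J_prev @ J_prev.T
    lam = np.zeros(J_prev.shape[0])
    for _ in range(max_iter):
        u = u_free - J_prev.T @ lam
        c = constraint(u)
        if np.max(np.abs(c)) < tol:
            return u
        lam = lam + np.linalg.solve(gram, c)
    raise RuntimeError("projection did not converge")

u_prev = np.array([1.0, 1.0])            # on the manifold: 1 + 1**2 - 2 = 0
u_free = u_prev + np.array([0.1, 0.05])  # unconstrained move, now off the manifold
u_new = project(u_free, jacobian(u_prev))
```

Each projection is thus a small nonlinear solve, with a simulator evaluation per iteration, which is where the "somewhat intense" feeling comes from. In this toy case the condition g⁰(u)=0 is available in closed form only because the simulator itself was chosen to be that simple.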