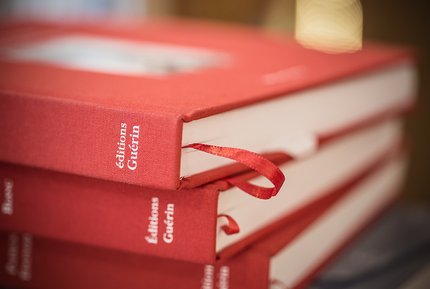

Read two more “little red” books from Éditions Guérin/Paulsen, the fantastic Chamonix publisher, namely Lénine à Chamonix by François Garde, a former Secretary-General of the Government of New Caledonia, and Les Hallucinés (Un voyage dans les délires d’altitude), by Thomas Venin. The first book is a collection of short stories related to mountains, ranging from the realistic to the fantastic, and from good to terrible. I think in particular of the 1447 mètres story, which involves a Holtanna-like big wall in Iceland [good start then!], possibly the Látrabjarg cliff (although it stands at 1447 feet, not meters!), and the absurd impact of prime numbers on the failure of the climbing team. Lénine à Chamonix muses on the supposed day Vladimir Ilyich “Lenin” Ulyanov spent in Chamonix in 1903, almost losing his life but adopting his alias there [which clashes with its 1902 first occurrence in publications!]. The second book is about high-altitude hallucinations as told by survivors from the “death zone”. Induced by hypoxia, they lead Himalayists to see imaginary things or persons, sometimes to act against their own interest, and often to die as a result. The stories are about those who survived and told of their visions. They reminded me of Abele Blanc telling us of facing the simultaneous hallucinations of two (!) partners during an attempt at Annapurna and managing to bring one of the climbers down, while the other made it down on his own after a minor fall reset his brain to the real world. Touching the limits of human abilities and the mysterious workings of the brain…


Cooked several dishes suggested by the New York Times (!), including a spinach risotto [good], orecchiette with fennel and sausages [great], and malai broccoli [not so great], as well as some of Yotam Ottolenghi’s recipes from the Guardian, like a yummy spinach-potato pie. As Fall is seeping in, went back to old classics like red cabbage Flemish style. And butternut soups, starting with our own. And a pumpkin biryani!

Read Peter Hamilton’s Salvation, with a certain reluctance to proceed as I found the stories within mostly disconnected and of limited interest. (This obviously came as a disappointment, having enjoyed Great North Road a lot.) I am unlikely to read the following volumes in the series. On the side, I heard that fantasy writer Terry Goodkind died on Sept. 17. He had written “The Sword of Truth” series, of which I read the first three volumes. (Out of 21 total!!!) While there were some qualities in the story, the setting was quite naïve (in the usual trope of an evil powerful character that must be fought at all costs) and the books carry a strong component of political conservatism as well as extensive sections of sadistic scenes…


Watched Tim Burton’s 2012 Dark Shadows (terrible!) and a Taiwanese 2018 dark comedy entitled Dear Ex (誰先愛上他的) which I found rather interesting and quite original, despite the overdone antics of the mother. I even tried Tim Burton’s Sweeney Todd for a few minutes, being completely unaware this was a musical!

Þe first internal research workshop of our ERC Synergy project OCEAN is taking place in Les Houches, French Alps, this coming week, with 15 researchers gathering to brainstorm on some of the themes at the core of the project, like algorithmic tools for multiple decision-making agents, along with Bayesian uncertainty quantification and Bayesian learning under constraints (scarcity, fairness, privacy). Due to the small size of the workshop (which is perfect for engaging in joint work), it could not be housed by the nearby, iconic École de Physique des Houches and will take place instead in a local hotel.

On the leisurely side, I hope there will be enough snow left for some lunch-time ski breaks [with no bone fracture à la Adapski!]. Or else that the running trails nearby will prove manageable.
