Archive for French Alps

good morning [from] Les Houches

Posted in Mountains, pictures, Running, Travel with tags Aravid, Chamonix-Mont-Blanc, downhill skiing, French Alps, Kandahar, Les Houches, morning run, Piste Noire, sunrise, super G on April 27, 2024 by xi'an

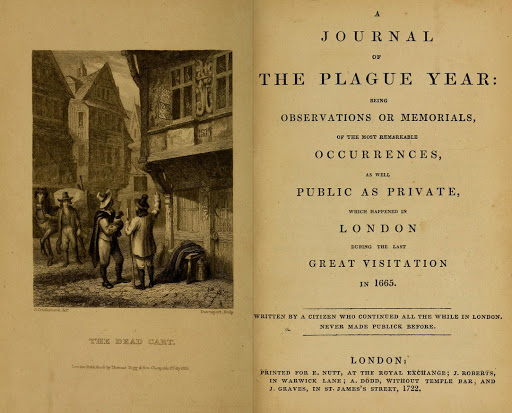

a journal of the conquest, war, [snow] famine, and death year

Posted in Books, Kids, Mountains, pictures, Running, Travel with tags Beaufort, Bernard Loiseau, bistronomie, book review, Chamonix-Mont-Blanc, COVID-19, Daniel Defoe, dhal, film review, French Alps, French cheese, Japanese TV series, Jerusalem artichoke, Journal of the Plague Year, Kinshin, Les Houches, MIchelin starred restaurant, Netflix, ninjas, novelette, parnsip, polenta, Ratatouille, Rocky Pop Hôtel, Russian invasion, Saulieu, Tampopo, teen culture, Ukraine, zombies on April 24, 2024 by xi'an

Read yet another novelette by Aliette de Bodard, The citadel of weeping pearls, set in the same universe of a Viêt Nam inspired galactic civilisation, which proved somewhat equal to the previous ones, with the plus of digging into mother-daughter and sibling relations and the slight minus of involving time travel [which, surprisingly, rarely fails to annoy me]. Right level for filling the nightly wake-ups I encountered while in Les Houches, despite a reasonable amount of physical activities since I totalled 79km of running that week and a few thousand meters of positive climb.

Did very little cooking while in Les Houches, obviously!, although we brought back a nice collection of very local cheeses, for the obvious reason of having evening meals at the workshop hotel together with the other participants. Nothing spectacular to report on that front, except for a limited vegetarian offer, incl. failed risotto and dhal, and more than substantial portions presumably calibrated for ravenous skiers (rather than regular runners!)… But had a lunch stop on the [high]way back home at [Ratatouille's inspiration] Bernard Loiseau's bistro, Loiseau des Sens (and not the Michelin Côte d'Or**!). While the dishes were made from unsophisticated ingredients, their perfect preparation really proved worth the détour (and light enough to avoid falling asleep on the remainder of the drive home!!!).

Watched House of Shinobi (忍びの家) on Netflix, following the paradoxes of a family of ninjas facing modern Japan while somewhat unwillingly keeping to their duties and honour code, which proved predictably cartoonish but brought comic relief, and included the actors Eguchi Yōsuke (playing a similar role in the series of Kenshin films) and Miyamoto Nobuko (who played in the excellent 1980s Tampopo). And Happiness (해피니스), yet another Korean zombie series, lengthy and easily forgotten.

Mont Blanc [jatp]

Posted in Mountains, pictures, Running, University life with tags Chamonix-Mont-Blanc, French Alps, Italian Alps, jatp, Les Houches, Mont Blanc, Savoie, summitting, the summit of the gods, workshop on April 3, 2024 by xi'an

the sky [isn’t] the limit

Posted in Mountains, pictures, Running, Travel with tags Alpine meadows, Chamonix-Mont-Blanc, clouds, French Alps, glacier, Les Bossons, Les Houches, Mont Blanc, snow, winter running on March 21, 2024 by xi'an

oceanographers in Les Houches

Posted in Books, Kids, Mountains, pictures, Running, Statistics, Travel, University life with tags Adapski, belief propagation, book review, Chamonix, CHANCE, expectation-propagation, French Alps, Lasso, Les Houches, multi-agent decision theory, Oxford University Press, sparsity, summer school on March 9, 2024 by xi'an

The first internal research workshop of our ERC Synergy project OCEAN is taking place in Les Houches, French Alps, this coming week with 15 researchers gathering for brain-storming on some of the themes at the core of the project, like algorithmic tools for multiple decision-making agents, along with Bayesian uncertainty quantification and Bayesian learning under constraints (scarcity, fairness, privacy). Due to the small size of the workshop (which is perfect for engaging into joint work), it could not be housed by the nearby, iconic, École de Physique des Houches but will take place instead in a local hotel.

On the leisurely side, I hope there will be enough snow left for some lunch-time ski breaks [with no bone fracture à la Adapski!] Or, else, that the running trails nearby will prove manageable.
