The next One World ABC webinar is this Thursday, the 2nd May, at 9am UK time, with Francesca Crucinio (King’s College London, formerly CREST and even more formerly Warwick) presenting

“A connection between Tempering and Entropic Mirror Descent”.

This is joint work with Nicolas Chopin and Anna Korba (both from CREST), whose abstract follows:

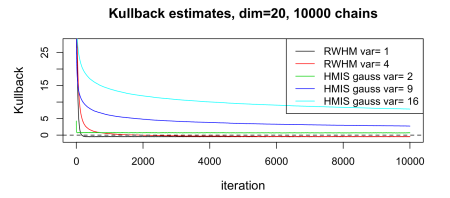

This work explores the connections between tempering (for Sequential Monte Carlo; SMC) and entropic mirror descent to sample from a target probability distribution whose unnormalized density is known. We establish that tempering SMC corresponds to entropic mirror descent applied to the reverse Kullback-Leibler (KL) divergence and obtain convergence rates for the tempering iterates. Our result motivates the tempering iterates from an optimization point of view, showing that tempering can be seen as a descent scheme of the KL divergence with respect to the Fisher-Rao geometry, in contrast to Langevin dynamics that perform descent of the KL with respect to the Wasserstein-2 geometry. We exploit the connection between tempering and mirror descent iterates to justify common practices in SMC and derive adaptive tempering rules that improve over other alternative benchmarks in the literature.
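Here is the gist of the correspondence, in our own reading (a sketch, not lifted from the paper). With the entropy as mirror map, a mirror descent step of size $\gamma_n$ on $\mu\mapsto\mathrm{KL}(\mu\,|\,\pi)$ reads $\mu_{n+1}\propto\mu_n\exp\{-\gamma_n\log(\mu_n/\pi)\}=\mu_n^{1-\gamma_n}\pi^{\gamma_n}$. Starting from $\mu_0=\pi_0$, every iterate therefore stays on the geometric tempering path $\pi_0^{1-\lambda_n}\pi^{\lambda_n}$, with $1-\lambda_{n+1}=(1-\gamma_n)(1-\lambda_n)$. The Python check below works on a finite state space with a fixed step size. The function names and toy distributions are made up for illustration, and none of the paper's adaptive tempering rules are reproduced.

```python
import math

def normalize(w):
    """Rescale non-negative weights so they sum to one."""
    s = sum(w)
    return [x / s for x in w]

def mirror_step(mu, target, gamma):
    """Hypothetical helper: one entropic mirror descent step on KL(mu || target)
    over a finite support, i.e. mu_new proportional to mu^(1-gamma) * target^gamma."""
    return normalize([m ** (1 - gamma) * p ** gamma for m, p in zip(mu, target)])

def geometric_bridge(pi0, target, lam):
    """Hypothetical helper: tempered distribution pi0^(1-lam) * target^lam, normalized."""
    return normalize([a ** (1 - lam) * b ** lam for a, b in zip(pi0, target)])

def kl(mu, target):
    """KL(mu || target) for finite distributions (terms with mu = 0 contribute 0)."""
    return sum(m * math.log(m / p) for m, p in zip(mu, target) if m > 0)

def run(pi0, target, gammas):
    """Iterate mirror descent from pi0 and track the implied tempering exponent."""
    mu, lam = list(pi0), 0.0
    iterates = [mu]
    for g in gammas:
        mu = mirror_step(mu, target, g)
        lam = lam + g * (1 - lam)  # equivalent to 1 - lam_new = (1 - g) * (1 - lam)
        iterates.append(mu)
    return iterates, lam

if __name__ == "__main__":
    pi0 = [0.25, 0.25, 0.25, 0.25]     # toy starting distribution (uniform)
    target = [0.1, 0.2, 0.3, 0.4]      # toy target distribution
    iterates, lam = run(pi0, target, [0.5, 0.5, 0.5])
    print("lambda:", lam)
    print("mirror descent iterate:", iterates[-1])
    print("tempered distribution: ", geometric_bridge(pi0, target, lam))
    print("KL along the way:", [kl(mu, target) for mu in iterates])
```

With $\gamma_n\equiv 0.5$, three steps give $\lambda_3=1-0.5^3=0.875$: the mirror descent iterate coincides with the tempered distribution at that exponent, and $\mathrm{KL}(\mu_n\,|\,\pi)$ decreases along the way.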

In connection with the