A JMLR paper by Papamakarios, Sterratt, and Murray (Edinburgh), first presented at the AISTATS 2019 meeting, on a new form of likelihood-free inference, away from non-zero tolerance and from the distance-based versions of ABC, following earlier papers by Iain Murray and co-authors in the same spirit. Which I got pointed to during the ABC workshop in Vancouver. At the time I had no idea what autoregressive flows meant. We were supposed to hold a reading group in Paris-Dauphine on this paper last week, unfortunately cancelled as a coronaviral precaution… Here are some notes I had prepared for the meeting that did not take place.

“A simulator model is a computer program, which takes a vector of parameters θ, makes internal calls to a random number generator, and outputs a data vector x.”

Just the usual generative model then.

“A conditional neural density estimator is a parametric model q(.|φ) (such as a neural network) controlled by a set of parameters φ, which takes a pair of datapoints (u,v) and outputs a conditional probability density q(u|v,φ).”

Less usual, in that the outcome is guaranteed to be a probability density.
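A minimal sketch of how such a guarantee can be enforced, assuming a Gaussian-mixture output (as in mixture density networks). The function `head` is a hypothetical hand-written stand-in for a neural network, and its coefficients are arbitrary; the point is only that a softmax on the weights and an exponential on the scales turn any real-valued outputs into a proper conditional density.

```python
import math

def head(v):
    """Hypothetical stand-in for a neural network: unconstrained outputs, K = 2 components."""
    logits = [0.5 * v, -0.5 * v]
    means = [v - 1.0, v + 1.0]
    log_sds = [0.1 * v, -0.2]
    return logits, means, log_sds

def cond_density(u, v):
    """q(u | v): a Gaussian mixture whose parameters are functions of v."""
    logits, means, log_sds = head(v)
    top = max(logits)
    unnorm = [math.exp(l - top) for l in logits]   # softmax, numerically stable
    total = sum(unnorm)
    dens = 0.0
    for w, m, log_sd in zip(unnorm, means, log_sds):
        sd = math.exp(log_sd)                       # exp keeps the scale positive
        z = (u - m) / sd
        dens += (w / total) * math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))
    return dens

def total_mass(v, lo=-30.0, hi=30.0, step=0.01):
    """Midpoint Riemann sum checking that q(. | v) integrates to one."""
    n = int(round((hi - lo) / step))
    return sum(cond_density(lo + (i + 0.5) * step, v) for i in range(n)) * step
```

Calling `total_mass(v)` for a few values of `v` should return a value numerically close to one whatever `head` outputs, which is the sense in which the density property holds by construction rather than by training.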

“For its neural density estimator, SNPE uses a Mixture Density Network, which is a feed-forward neural network that takes x as input and outputs the parameters of a Gaussian mixture over θ.”

In which theoretical sense would it improve upon classical or Bayesian density estimators? Where are the error evaluation, the optimal rates, the sensitivity to the dimension of the data? of the parameter?

“Our new method, Sequential Neural Likelihood (SNL), avoids the bias introduced by the proposal, by opting to learn a model of the likelihood instead of the posterior.”

I do not get the argument, in that the final outcome (of using the approximation within an MCMC scheme) remains biased, since the approximate likelihood is not the exact likelihood. Where is the error evaluation? Note that in the associated Algorithm 1, the learning set is enlarged on each round, as in AMIS, rather than set back to the empty set ∅ on each round.
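A minimal sketch of the round structure described above, under toy assumptions that are not from the paper: a Gaussian simulator, a uniform prior, a linear-Gaussian surrogate fitted by least squares in place of the neural density estimator, and random-walk Metropolis in place of the authors' MCMC sampler. All names and constants are hypothetical. The only feature it is meant to show is that the training set grows across rounds while the proposal moves towards the (surrogate) posterior.

```python
import math
import random

rng = random.Random(0)
X_OBS = 1.5                       # hypothetical observed data point
PRIOR_LO, PRIOR_HI = -5.0, 5.0    # hypothetical uniform prior on theta

def simulator(theta):
    """Toy simulator, x | theta ~ N(theta, 1), standing in for an intractable model."""
    return theta + rng.gauss(0.0, 1.0)

def fit_surrogate(pairs):
    """Maximum-likelihood fit of q(x | theta) = N(a + b * theta, s^2) by least squares."""
    n = len(pairs)
    mt = sum(t for t, _ in pairs) / n
    mx = sum(x for _, x in pairs) / n
    stt = sum((t - mt) ** 2 for t, _ in pairs)
    stx = sum((t - mt) * (x - mx) for t, x in pairs)
    b = stx / stt
    a = mx - b * mt
    s2 = sum((x - a - b * t) ** 2 for t, x in pairs) / n
    return a, b, math.sqrt(s2)

def log_post(theta, surrogate):
    """Unnormalised log posterior: uniform prior times surrogate likelihood at X_OBS."""
    if not PRIOR_LO <= theta <= PRIOR_HI:
        return -math.inf
    a, b, s = surrogate
    return -0.5 * ((X_OBS - a - b * theta) / s) ** 2

def mcmc(surrogate, n_steps, start=0.0, scale=2.0):
    """Random-walk Metropolis targeting the surrogate posterior."""
    theta, lp = start, log_post(start, surrogate)
    chain = []
    for _ in range(n_steps):
        prop = theta + rng.gauss(0.0, scale)
        lp_prop = log_post(prop, surrogate)
        if math.log(1.0 - rng.random()) < lp_prop - lp:
            theta, lp = prop, lp_prop
        chain.append(theta)
    return chain

def snl(n_rounds=3, n_per_round=500):
    training = []                                   # enlarged on every round, never reset
    proposals = [rng.uniform(PRIOR_LO, PRIOR_HI) for _ in range(n_per_round)]
    surrogate = None
    for _ in range(n_rounds):
        training += [(t, simulator(t)) for t in proposals]
        surrogate = fit_surrogate(training)         # refit on all pairs so far
        proposals = mcmc(surrogate, n_per_round)    # next proposal: surrogate posterior
    return surrogate, training

surrogate, training = snl()
posterior_sample = mcmc(surrogate, 5000)
```

In this toy case the surrogate family contains the true likelihood, so the bias in question vanishes with the number of simulations. With a misspecified or under-trained estimator, nothing in this loop quantifies the remaining error.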

“…given enough simulations, a sufficiently flexible conditional neural density estimator will eventually approximate the likelihood in the support of the proposal, regardless of the shape of the proposal. In other words, as long as we do not exclude parts of the parameter space, the way we propose parameters does not bias learning the likelihood asymptotically. Unlike when learning the posterior, no adjustment is necessary to account for our proposing strategy.”

This is a rather vague statement, with the only support being that the Monte Carlo approximation to the Kullback-Leibler divergence does converge to its actual value, i.e. a direct application of the Law of Large Numbers! But it connects with an interesting point I informally made a (long) while ago, namely that all that matters is the estimate of the density at x⁰. Or at the value of the statistic at x⁰. The masked auto-encoder density estimator is based on a sequence of bijections with a lower-triangular Jacobian matrix, meaning the conditional density estimate is available in closed form. Which makes it sound like a form of neurotic variational Bayes solution.
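For the record, the Law of Large Numbers argument can be written out as follows. The notation is an assumption made here, not necessarily the paper's: the proposal is written p̃(θ), the intractable likelihood p(x|θ), and the neural estimator q_φ(x|θ). The pairs (θₙ, xₙ) are taken as i.i.d. from p̃(θ)p(x|θ), which simplifies the actual multi-round scheme.

$$
\frac{1}{N}\sum_{n=1}^{N} \log q_\phi(x_n \mid \theta_n)
\;\xrightarrow[N\to\infty]{\text{a.s.}}\;
\mathbb{E}_{\tilde p(\theta)\,p(x\mid\theta)}\big[\log q_\phi(x\mid\theta)\big]
= -\,\mathbb{E}_{\tilde p(\theta)}\Big[\mathrm{KL}\big(p(\cdot\mid\theta)\,\big\|\,q_\phi(\cdot\mid\theta)\big)\Big] + C,
\qquad
C = \mathbb{E}_{\tilde p(\theta)\,p(x\mid\theta)}\big[\log p(x\mid\theta)\big].
$$

Since C does not depend on φ, maximising the left-hand side amounts in the limit to minimising the averaged divergence, which is zero if and only if q_φ(·|θ) = p(·|θ) for p̃-almost every θ, whatever p̃ is. The convergence holds for each fixed φ and carries no information on rates or on the behaviour of the maximiser at finite N.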

The paper also links with ABC (too costly?), other parametric approximations to the posterior (like Gaussian copulas and variational likelihood-free inference), synthetic likelihood, Gaussian processes, noise contrastive estimation… With experiments involving some of the above. But the experiments involve rather smooth models with relatively few parameters.

“A general question is whether it is preferable to learn the posterior or the likelihood (…) Learning the likelihood can often be easier than learning the posterior, and it does not depend on the choice of proposal, which makes learning easier and more robust (…) On the other hand, methods such as SNPE return a parametric model of the posterior directly, whereas a further inference step (e.g. variational inference or MCMC) is needed on top of SNL to obtain a posterior estimate”

A fair point in the conclusion. Which also mentions the curse of dimensionality (both for parameters and observations) and the possibility to work directly with summaries.

Getting back to the earlier and connected Masked autoregressive flow for density estimation paper, by Papamakarios, Pavlakou and Murray:

“Viewing an autoregressive model as a normalizing flow opens the possibility of increasing its flexibility by stacking multiple models of the same type, by having each model provide the source of randomness for the next model in the stack. The resulting stack of models is a normalizing flow that is more flexible than the original model, and that remains tractable.”

Which makes it sound like a sort of neural network in the density space. Optimised by Kullback-Leibler minimisation to get asymptotically close to the likelihood. But a form of Bayesian indirect inference in the end, namely an MLE on a pseudo-model, using the estimated model as a proxy in Bayesian inference…
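A minimal sketch of the stacking idea in two dimensions, assuming affine autoregressive layers. The shift and log-scale functions in `LAYERS` are hypothetical hand-picked functions standing in for trained masked networks, and reversing the coordinate order between layers is a design choice made here. Each layer transforms coordinate i using the earlier coordinates only, so its Jacobian is triangular and the log-density follows in closed form from the change of variables.

```python
import math

def layer_inverse(x, mu, alpha):
    """Data -> noise for one affine autoregressive layer.

    Coordinate i is shifted and scaled by functions of x[:i] only, hence a
    lower-triangular Jacobian with log-determinant -sum(alpha_i).
    """
    u, log_det = [], 0.0
    for i in range(len(x)):
        a = alpha(x[:i])
        u.append((x[i] - mu(x[:i])) * math.exp(-a))
        log_det -= a
    return u, log_det

def layer_forward(u, mu, alpha):
    """Noise -> data: the same layer run as a sampler, one coordinate at a time."""
    x = []
    for i in range(len(u)):
        x.append(u[i] * math.exp(alpha(x)) + mu(x))   # x holds coordinates 0..i-1 here
    return x

# Two hypothetical layers as (shift, log-scale) pairs; both return 0 on an empty prefix.
LAYERS = [
    (lambda prev: 0.5 * sum(prev), lambda prev: 0.3 * math.tanh(sum(prev))),
    (lambda prev: math.sin(sum(prev)), lambda prev: -0.2 * math.tanh(sum(prev))),
]

def sample_path(u, layers=LAYERS):
    """Push noise u through the stack: each layer's output is the next layer's noise."""
    z = list(u)
    for mu, alpha in layers:
        z = layer_forward(z[::-1], mu, alpha)         # reverse order, then transform
    return z

def to_noise(x, layers=LAYERS):
    """Invert the stack, accumulating the log-determinants of the inverse maps."""
    z, total = list(x), 0.0
    for mu, alpha in reversed(layers):
        z, log_det = layer_inverse(z, mu, alpha)
        z = z[::-1]                                   # undo the reversal (|det| = 1)
        total += log_det
    return z, total

def log_density(x, layers=LAYERS):
    """Closed-form log-density: standard normal base plus log-Jacobian terms."""
    u, log_det = to_noise(x, layers)
    base = sum(-0.5 * v * v - 0.5 * math.log(2.0 * math.pi) for v in u)
    return base + log_det
```

For instance, `to_noise(sample_path([0.3, -1.2]))` should return the original noise up to rounding, and `log_density` can be evaluated at any point without integration, which is what keeps the stack tractable.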

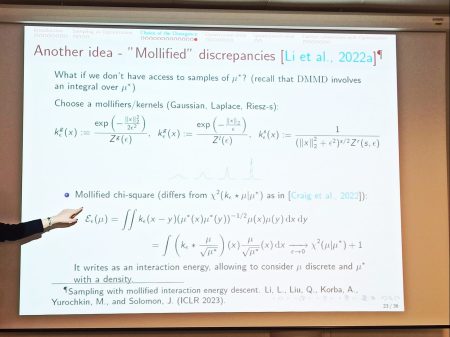

Yesterday, which proved an unseasonably bright, warm day, I biked (with a new wheel!) to the east of Paris (in the Gare de Lyon district, where I lived for three years in the 1980s) to attend a Mokaplan seminar at INRIA Paris, where Anna Korba (CREST, to which I am also affiliated) talked about sampling through optimization of discrepancies.

This proved a most formative hour as I had not seen this perspective earlier (or possibly had forgotten about it). Except through some of the talks at the Flatiron Institute on Transport, Diffusions, and Sampling last year. Including Marilou Gabrié’s and Arnaud Doucet’s. The underlying concept remains attractive to me since it consists in approximating the target distribution, known up to a constant (a setting I have always felt standard simulation techniques were not exploiting to the maximum) or through a sample (a setting less convincing since the sample from the target is already there), via a sequence of (particle approximated) distributions, using the discrepancy between the current distribution and the target, or the gradient thereof, to move the particles. (With no randomness in the Kernel Stein Discrepancy Descent algorithm.)
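A minimal sketch of such a deterministic particle scheme, using Stein variational gradient descent (SVGD) in one dimension rather than the Kernel Stein Discrepancy Descent of the talk. The Gaussian kernel with fixed bandwidth `h`, the step size, the initial grid, and the N(2, 1) target are all assumptions made for illustration. The target enters only through the gradient of its log density, hence is needed only up to a constant.

```python
import math

def svgd(particles, grad_log_target, n_iter=1000, step=0.1, h=1.0):
    """SVGD in 1-D with a Gaussian kernel; deterministic given the initial particles."""
    xs = list(particles)
    n = len(xs)
    for _ in range(n_iter):
        grads = [grad_log_target(x) for x in xs]
        new_xs = []
        for xi in xs:
            phi = 0.0
            for xj, gj in zip(xs, grads):
                k = math.exp(-0.5 * ((xj - xi) / h) ** 2)
                # attraction towards high target density, plus repulsion between particles
                phi += k * gj + k * (xi - xj) / h ** 2
            new_xs.append(xi + step * phi / n)
        xs = new_xs
    return xs

def grad_log_target(x):
    """Hypothetical target N(2, 1): gradient of its log density, free of the constant."""
    return -(x - 2.0)

init = [-1.0 + 2.0 * i / 19 for i in range(20)]   # deterministic grid on [-1, 1]
final = svgd(init, grad_log_target)
mean = sum(final) / len(final)
var = sum((x - mean) ** 2 for x in final) / len(final)
```

The particles drift from the initial grid towards the target and spread out under the repulsion term. How well the resulting empirical distribution matches the target for a finite number of particles is precisely the kind of error evaluation that this sketch does not provide.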

Anna Korba spoke about practically running the algorithm, as well as about convexity properties and some convergence results (with mixed performances for the Stein kernel, as opposed to SVGD). I remain definitely curious about several aspects of the method, like the (ergodic) distribution of the endpoints, the actual gain against an MCMC sample when accounting for computing time, the improvement over the empirical distribution when using a sample from π and its ecdf as the substitute for π, and the meaning of an error estimation in this context.

“We are interested in the question of how we can build differentially-private algorithms
