“We believe that an elicitation method should support elicitation both in the parameter and observable space, should be model-agnostic, and should be sample-efficient since human effort is costly.”

Petrus Mikkola et al. arXived a long paper on prior elicitation addressing the (most relevant) question: Why are we not widely using prior elicitation? With a massive bibliography that could be (partly) commented (and corrected, as some references are incomplete, e.g. my book chapter on priors!). I think the paper would make a terrific discussion paper.

The absence of a general procedure for prior elicitation is indeed hindering the adoption of Bayesian methods outside our core community and is thus eventually detrimental to their wider development. It also carries the dangers of misled or misleading prior choices. The authors put forward the absence of “software that integrates well with the current probabilistic programming tools used for other parts of the modelling workflow.” This requires setting principles that avoid “just-press-key” solutions. (Aside: This reminds me of my very first prospective PhD student, who was then working in a startup [although the name was not yet in use in the early 1990s!] and had built such software in a discretised, low-dimensional, conjugate-prior environment, returning a form of decision-theoretic impact of the chosen hyperparameters. He alas aborted his PhD attempt due to the short-term pressing matters in the under-staffed company…)

“We inspect prior elicitation from the perspectives of (1) properties of the prior distribution itself, (2) the model family and the prior elicitation method’s dependence on it, (3) the underlying elicitation space, (4) how the method interprets the information provided by the expert, (5) computation, (6) the form and quantity of interaction with the expert(s), and (7) the assumed capability of the expert (…)”

Prior elicitation is indeed a delicate balance between incorporating expert opinion(s) and avoiding over-standardisation. In my limited experience, experts tend to be over-confident about their own opinion and unwilling to attach uncertainty to their assessments, even when being inconsistent. When several experts are involved (as, very briefly, in Section 3.6), building a common prior quickly becomes a challenge, esp. if their interests (or utility functions) diverge, as illustrated in the case of the whaling commission analysed by Adrian Raftery in the late 1990’s. (The above quote involves a single expert.) Actually, I dislike the term expert altogether, as it comes without any grading of the reliability of the person.

To hit (!) at an early statement in the paper (p.5), should the prior elicitation always depend on the (sampling) model, as experts may ignore or misapprehend the model? The posterior already accounts for the likelihood and the parameter may pre-exist wrt the model, as e.g. cosmological constants or vaccine efficacy… In a sense, the model should be involved as little as possible in the elicitation, as the expert could confuse her beliefs about the parameter with those about the accuracy of the model. (I realise this is not necessarily a mainstream position, as illustrated by this paper by Andrew and friends!)

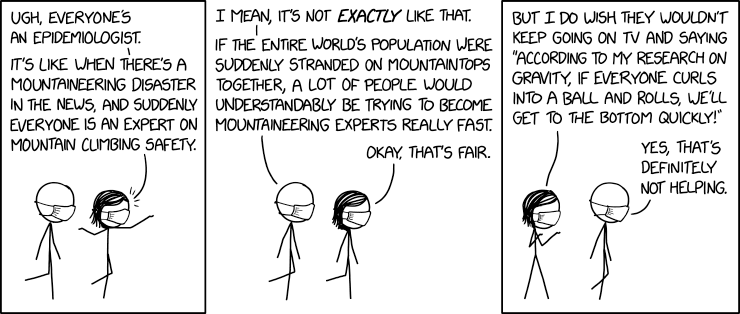

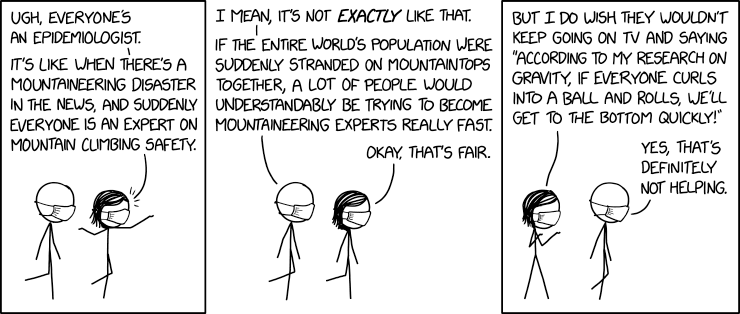

And isn’t the first stumbling block the inability of most people to represent their prior knowledge in probabilistic terms? Innumeracy is a shared shortcoming in the general population (and everyone’s an expert!), as repeatedly demonstrated since the start of the Covid-19 pandemic. (See also the above point about inconsistency. Accounting for such inconsistencies in a Bayesian way is a natural answer, albeit requiring the degree of expertise and reliability to be tested.)
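To make the point about probabilistic statements and inconsistency concrete, here is a minimal sketch of quantile matching: two elicited quantiles pin down a two-parameter prior, and any third statement can then be checked against what that prior implies. The lognormal family, the function names, and all numbers are assumptions chosen for illustration; none of them come from the paper.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for quantile conversions


def lognormal_from_quantiles(median, q90):
    """Return (mu, sigma) of the lognormal matching an elicited median and 90% quantile.

    Hypothetical helper: assumes the expert's belief about a positive
    parameter is well described by a lognormal distribution.
    """
    if not 0 < median < q90:
        raise ValueError("need 0 < median < q90")
    mu = math.log(median)  # the lognormal median is exp(mu)
    sigma = (math.log(q90) - mu) / STD_NORMAL.inv_cdf(0.90)
    return mu, sigma


def implied_quantile(mu, sigma, p):
    """Quantile of order p of the lognormal with parameters (mu, sigma)."""
    return math.exp(mu + sigma * STD_NORMAL.inv_cdf(p))


# Illustrative expert statements (made up for this sketch).
mu, sigma = lognormal_from_quantiles(median=2.0, q90=5.0)
stated_q10 = 1.5  # a third statement by the same expert
implied_q10 = implied_quantile(mu, sigma, 0.10)

# A large gap signals that the three statements cannot all hold
# under the assumed family.
gap = stated_q10 - implied_q10
print(f"implied 10% quantile: {implied_q10:.3f}, stated: {stated_q10}, gap: {gap:.3f}")
```

With only two parameters to fit, the third statement is either redundant or contradictory, which is one simple way of exposing the kind of inconsistency mentioned above.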

Is prior elicitation feasible beyond a few dimensions? Even when using the constrictive tool of copulas, one hits a wall after a few dimensions, assuming the expert is willing to set a prior correlation matrix. Most of the methods described in Section 3.1 only apply to textbook examples. In their third dimension (!), the authors mention neural network parameters but later fail to cover this type of issue. (This was the example I had in mind indeed.) And they move from parameter space to observable space, distinguishing predictive elicitation from observational elicitation, the former being what I would have suggested from scratch. Obviously, the curse of dimensionality strikes again unless one considers summary statistics (like in ABC).
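As a rough illustration of what the copula route asks of the expert, here is a two-dimensional Gaussian-copula sketch: the expert supplies two marginal priors plus one correlation, and joint draws follow by pushing correlated normals through the marginal quantile functions. The marginals, the function name, and the value of `rho` are assumptions made up for this example.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def sample_copula_prior(rho, n, seed=0):
    """Draw n pairs (theta1, theta2) from a 2-d Gaussian-copula prior.

    Illustrative marginals (assumptions, not from the paper):
    theta1 ~ Exponential(rate=1), theta2 ~ Normal(0, 2).
    rho is the correlation of the latent normal pair.
    """
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    rng = random.Random(seed)
    theta2_marginal = NormalDist(mu=0.0, sigma=2.0)
    eps = 1e-12  # keeps the uniforms away from 0 and 1
    draws = []
    for _ in range(n):
        # Correlated standard normals via the 2x2 Cholesky factor.
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        # Map to uniforms, then through each marginal's inverse CDF.
        u1 = min(max(STD_NORMAL.cdf(z1), eps), 1.0 - eps)
        u2 = min(max(STD_NORMAL.cdf(z2), eps), 1.0 - eps)
        theta1 = -math.log1p(-u1)  # inverse CDF of Exponential(1)
        theta2 = theta2_marginal.inv_cdf(u2)
        draws.append((theta1, theta2))
    return draws


draws = sample_copula_prior(rho=0.7, n=2000, seed=1)
```

In dimension d the expert must provide d marginals and d(d-1)/2 correlations, hence 45 correlations for d=10, and these must jointly form a valid correlation matrix, which is where the wall shows up.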

While I am glad conjugate priors do not get the lion’s share, using, as in Section 3.3, non-parametric or machine learning solutions to construct the prior sounds unrealistic. (And including maximum entropy priors into that category seems wrong since they are definitely parametric.)

The proposed Bayesian treatment of the expert’s “data” (Section 4.1) is rational but requires an additional model construct to link the expert’s data with the parameter to reach a Bayes formula like (4.1). Plus a primary prior (which could then be one of the reference priors). Reducing the expert’s input to imaginary observations may prove too narrow, though. The notion of an iterative elicitation is most appealing and its sequential aspect may not be particularly problematic, as opposed to posteriors relying on using the data twice or more. I am much less convinced by the hierarchical constructs of Section 4.3, because they imply a return to conjugate priors and hyperpriors, are not necessarily correctly understood by experts, do not always cater to observational elicitation, and are not an answer to high-dimension challenges.
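A toy version of this construction, in the imaginary-observation case: the elicited prior is taken proportional to p(expert data | θ) times a primary prior on θ. The sketch below assumes a Bernoulli link model, a uniform primary prior, and a grid approximation; it is not the paper’s formula (4.1), and the function name and the counts are made up.

```python
import math


def elicited_prior_on_grid(successes, trials, grid_size=999):
    """Grid approximation of the prior induced by the expert's imaginary data.

    Assumptions of this sketch: the expert's input is summarised as
    `successes` out of `trials` imaginary Bernoulli(theta) outcomes,
    and the primary prior on theta is uniform on (0, 1).
    """
    grid = [(i + 1) / (grid_size + 1) for i in range(grid_size)]
    # Log-likelihood of the imaginary data; the uniform primary prior
    # only adds a constant.
    log_w = [
        successes * math.log(t) + (trials - successes) * math.log(1.0 - t)
        for t in grid
    ]
    top = max(log_w)  # subtract the maximum for numerical stability
    weights = [math.exp(lw - top) for lw in log_w]
    total = sum(weights)
    return grid, [w / total for w in weights]


# "I would expect about 7 successes in 10 trials" (illustrative statement).
grid, probs = elicited_prior_on_grid(successes=7, trials=10)
prior_mean = sum(t * p for t, p in zip(grid, probs))
print(f"elicited prior mean: {prior_mean:.3f}")
```

Under these assumptions the grid weights approximate a Beta(8, 4) distribution, so the construct is conjugate here, and the number of imaginary trials plays the role of the expert’s confidence.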

Given the state of the art, it sounds like we are still far from seeing prior elicitation as a natural part of Bayesian software and probabilistic programming. Even when using a modular, model-agnostic strategy. But this is most certainly a worthy prospect!

